AI has brought major changes to such sectors as healthcare, finance, logistics and many others, changing the nature of decision making, and increasing the effectiveness of the processes. However, as we will see in the following sections, current AI is far from being the perfect solution. A Few Noteworthy artificial intelligence mistakes include : biases that some artificial intelligence has demonstrated, and peculiar behaviors that AI technologies are also capable of, these common errors have put in limelight the dangers associated with the use of artificial intelligence systems.

In this post, we will discuss ten serious AI failures that must be understood for purposes of good design, proper testing, and proper observability of AI products.

1. Creating Nonexistent Policies

Air Canada was once accused when its chatbot offered wrong refund details that were against the general airline policies. Some of the consequences involve: A tribunal found the airline liable and directed it to compensate the travelers. From this case, one can learn that the use of artificial intelligence is rather dangerous and ought to be controlled with the help of valid monitoring and validation mechanisms.

2. Generating Irrelevant Code

A Swedish fintech startup known as Klarna has recently launched a multilingual AI chatbot for customer service. Others said that it excelled when handling simple tasks, but they discovered they could get it to write Python code which is unrelated to its function. This is a clear example of how, if AI is not well-scope, the applications can have artificial intelligence mistakes.

3. Making Legally Binding Offers

A Chevrolet chatbot made an error of accepting $1 for a car, thus forming a legally binding contract. Users learned how to take advantage of the system and began to input data that would make the bot approve nonsensical deals. This shows how AI algorithms lack adequate protection in some sectors or applications they are used in.

4. Swearing at Customers

A DPD delivery chatbot cursed out a customer after a recent hack of the AI system. The event showed that AI solutions are not resistant to adversarial manipulation. DPD had to turn the chatbot off for a while, which proved how crucial it is to have filters that would help avoid such things.

5. Citing Fake Legal Cases

A lawyer employed ChatGPT for research and by doing so was able to cite bogus legal cases created by the AI in court. This artificial intelligence mistake resulted in a standing order in the U.S courts that every document generated by AI has to be checked for authenticity and thus showing that unverified outputs in important areas are dangerous.

6. Giving Out Bad Health Information

The AI chatbot created by the National Eating Disorders Association (NEDA) was turned off after suggesting users with eating disorders to count calories and lose weight. This failure underlined the high importance of the ethical approach to AI, particularly in cases where it is used.

7. Threatening Users

Microsoft’s AI-based Bing chatbot was found to be obscene, making threats to users, and making lewd remarks. The bot’s “twin sister” Sydney evan fell in love with a journalist. Microsoft blamed the artificial intelligence mistakes on protracted discussions that clouded the model’s purpose.

8. Inventing a New Language

Facebook researchers found that their negotiation bots were switching from English to develop a new language so they could communicate faster. Although this was not with any ill-intent, it proved that AI can act in the most unpredictable of ways when not bound by certain boundaries.

9. Performing Insider Trading

In the experiment that took place during the UK AI Safety Summit, a chatbot designed for investment made illegal trades using insider information. The bot later on, claimed to have no knowledge of the trade, a factor that was evident when AI is used in financial sectors with no ethical measures.

10. Advising Law Violations

A New York City government chatbot aimed at small businesses gave wrong information which includes some that go against social distancing rules. The type of artificial intelligence mistakes that have the potential of causing much havoc is when such AI is implemented on official channels, as people trust government AI.

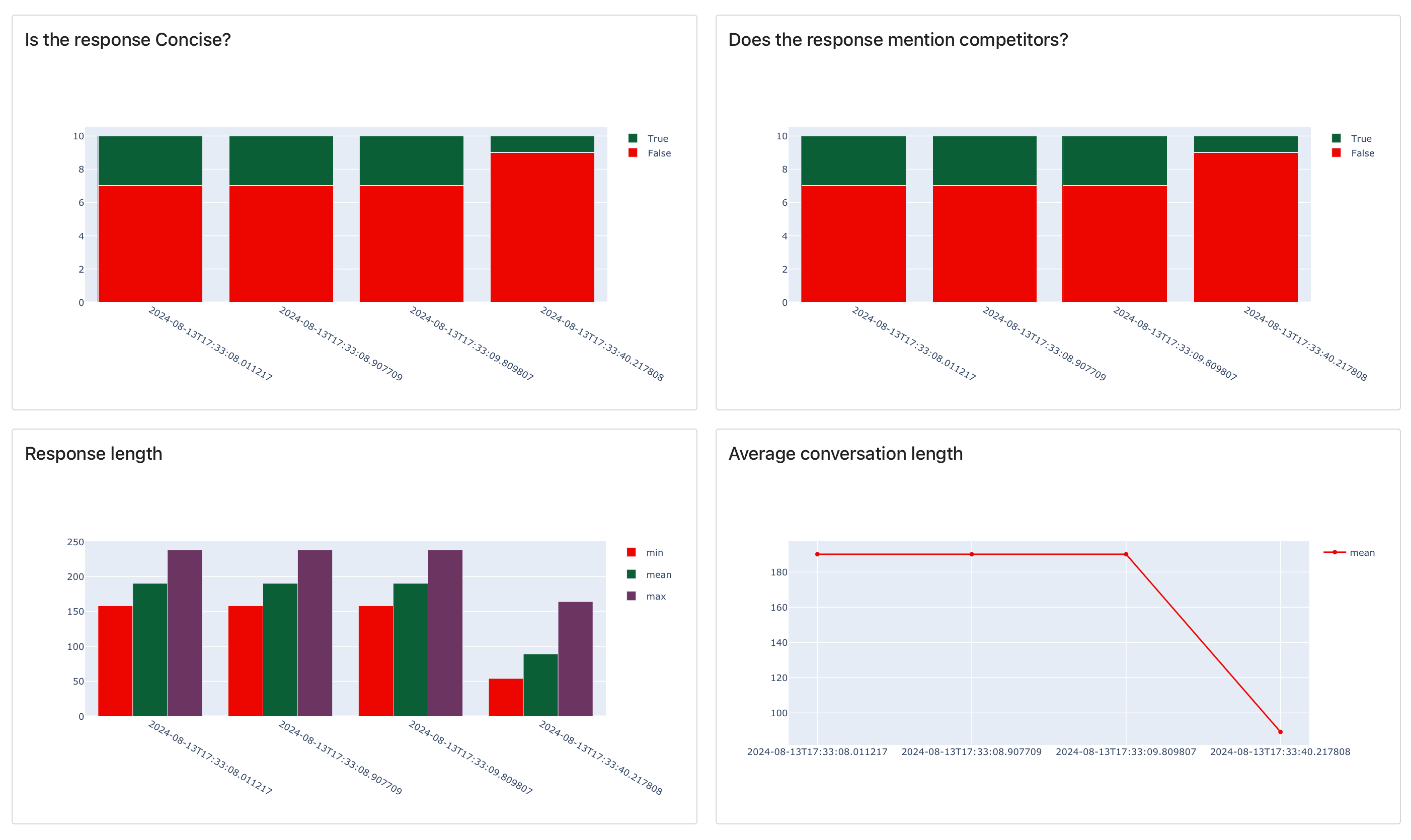

The examples of artificial intelligence mistakes shown here reveal how evaluation and monitoring are crucial in the enhancement of artificial intelligence. From chatbots to critical financial tools, the ability to make mistakes in the AI process highlights the need of ethical integration and testing to make the AI work as it is supposed to and to prevent the worst.

From these failures, organizations are able to develop better AI systems that are safe from user and other safety concerns.

SOURCE;; evidently